Learn how to create your own ChatGPT-like chatbot with this comprehensive step-by-step guide. From setting up the development environment to fine-tuning and deploying your model, discover how to build an intelligent conversational agent.

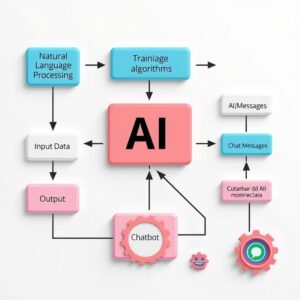

Artificial intelligence (AI) has transformed the way we interact with machines, and conversational AI models like ChatGPT are at the forefront of this revolution. By leveraging machine learning and natural language processing (NLP), these models can understand and generate human-like text, making them ideal for applications like virtual assistants, chatbots, content generation, and more.

Creating your own version of ChatGPT may sound complex, but with the right resources you can build a chatbot that reproduces much of its conversational behavior, usually with a much smaller model. In this comprehensive step-by-step guide, we'll walk you through creating your own ChatGPT-like chatbot, from setting up your environment to fine-tuning your model and deploying it.

Introduction to ChatGPT

ChatGPT is a language model developed by OpenAI, designed to understand and generate human-like responses in natural language. It is based on the GPT (Generative Pre-trained Transformer) architecture: a transformer neural network that is pre-trained on large amounts of text to predict the next token in a sequence, and that produces a response by generating one token at a time.
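To make "one token at a time" concrete, here is a toy sketch of autoregressive generation: repeatedly pick the next token given what has been generated so far, append it, and stop at an end marker. The hand-written score table `NEXT_WORD_SCORES` is a hypothetical stand-in for the neural network, and it only looks at the last word, whereas a real GPT model scores the next token using the whole context.

```python
from typing import Dict, List

# Hypothetical toy "model": for each word, scores for the possible next words.
# A real GPT model computes such scores with a transformer over the full context.
NEXT_WORD_SCORES: Dict[str, Dict[str, float]] = {
    "<start>": {"hello": 0.9, "hi": 0.1},
    "hello": {"there": 0.7, "world": 0.3},
    "there": {"friend": 0.6, "<end>": 0.4},
    "friend": {"<end>": 1.0},
}


def generate(max_tokens: int = 10) -> List[str]:
    """Greedy autoregressive decoding: append the top-scoring next token each step."""
    tokens = ["<start>"]
    for _ in range(max_tokens):
        # Unknown words fall back to ending the sequence.
        scores = NEXT_WORD_SCORES.get(tokens[-1], {"<end>": 1.0})
        next_token = max(scores, key=scores.get)
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens[1:]  # drop the start marker


print(" ".join(generate()))
```

Real chatbots usually sample from the scores (for example with top-p sampling) instead of always taking the maximum, which makes replies less repetitive.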

The model was trained on large and diverse text datasets and then further tuned on conversational data, including with human feedback, enabling it to hold conversations, answer questions, summarize content, write articles, generate code, and much more. While OpenAI hosts and maintains the official ChatGPT, you can build a similar chatbot using open-source frameworks or OpenAI's API.

Creating your own version of ChatGPT allows you to customize the model to suit specific needs, from customer support to educational tools and entertainment applications. This guide outlines the process of building your chatbot, starting from setting up your development environment to testing and deploying it.

What You Need to Get Started

Before you dive into the process of creating your ChatGPT-like chatbot, it’s essential to gather the necessary tools and resources:

- Programming Skills: A basic understanding of Python programming and machine learning concepts is crucial.

- Cloud Platform/Server: You can run the model on your local machine, but using cloud services like AWS, Google Cloud, or Microsoft Azure will help with scalability and computational resources.

- Machine Learning Libraries: Hugging Face's Transformers library, together with a deep learning framework such as PyTorch or TensorFlow, provides the building blocks for NLP tasks and custom models.

- Pre-Trained Models or APIs: If you’re using GPT-3 or GPT-4, you’ll need access to OpenAI’s API. Alternatively, you can use open-source pre-trained models available on platforms like Hugging Face.

- Data for Fine-Tuning: To create a chatbot tailored to your use case, you’ll need conversational datasets or dialogue datasets to train or fine-tune the model.

Step 1: Choose the Right Model Framework

The first step in creating your ChatGPT-like chatbot is selecting the appropriate model framework. This choice determines how the chatbot will be built and how powerful the AI model will be.

- OpenAI GPT Models: If you’re looking for a highly advanced language model, you can use OpenAI’s GPT-3 or GPT-4 via their API. These models are powerful but come with usage costs.

- Hugging Face Transformers: If you prefer an open-source solution, Hugging Face provides access to various transformer-based models, such as GPT-2, GPT-Neo, and GPT-J. These models can be fine-tuned for specific use cases and are free to download, but you have to supply the computing power to run and train them yourself.
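For the OpenAI API option, a minimal sketch with the official `openai` Python package (version 1 or later) is shown below. It assumes the package is installed and an `OPENAI_API_KEY` environment variable is set; the model name "gpt-4o-mini" is only an example, and the helper names `build_messages` and `ask_openai` are hypothetical.

```python
from typing import Dict, List, Tuple


def build_messages(system_prompt: str, history: List[Tuple[str, str]],
                   user_message: str) -> List[Dict[str, str]]:
    """Convert a conversation into the role/content list used by the Chat Completions API."""
    messages = [{"role": "system", "content": system_prompt}]
    for user_turn, assistant_turn in history:  # earlier (user, assistant) exchanges
        messages.append({"role": "user", "content": user_turn})
        messages.append({"role": "assistant", "content": assistant_turn})
    messages.append({"role": "user", "content": user_message})
    return messages


def ask_openai(messages: List[Dict[str, str]], model: str = "gpt-4o-mini") -> str:
    """Send the messages to OpenAI and return the reply text (needs network and an API key)."""
    from openai import OpenAI  # imported lazily so build_messages works without the package

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(model=model, messages=messages)
    return response.choices[0].message.content
```

For example, `ask_openai(build_messages("You are a helpful support agent.", [], "How do I reset my password?"))` returns the model's reply as a string. Passing the earlier turns in `history` on every call is what gives the bot its conversational memory, since the API itself is stateless.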

For this guide, we’ll focus on Hugging Face Transformers as they offer a versatile and open-source platform with a large selection of pre-trained models.

Why Hugging Face?

- The library is free and open source, and the Hugging Face Hub offers many pre-trained models that can be fine-tuned.

- It's well documented, with numerous tutorials and a large developer community.

- You can access several different models that are fine-tuned for various tasks, including GPT-based models.

Step 2: Set Up Your Development Environment

To build a ChatGPT-like chatbot, you need to set up a Python environment and install the necessary libraries.

1. Install Python:

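Ensure that Python 3.7 or higher is installed on your machine. Recent releases of PyTorch and Transformers require a newer Python version than that, so check their installation pages for the current minimum. You can download Python from the official Python website (python.org).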
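A quick way to confirm which interpreter you are running, and whether it meets the minimum, is a short check with the standard `sys` module. The 3.7 threshold mirrors the text; raise `MINIMUM` if the library versions you install need something newer. The helper name is illustrative.

```python
import sys

# Minimum version from this guide; newer library releases may require more.
MINIMUM = (3, 7)


def python_is_supported(version=sys.version_info[:2], minimum=MINIMUM) -> bool:
    """Return True if the given (major, minor) version meets the minimum."""
    return tuple(version) >= minimum


print(f"Python {sys.version.split()[0]} supported: {python_is_supported()}")
```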

2. Create and Activate a Virtual Environment:

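A virtual environment keeps each project's dependencies separate from other projects and from your system Python. In your terminal, create the environment (chatbot-env is just an example name; on some Mac and Linux systems the command is `python3`):

`python -m venv chatbot-env`

Activate it:

- Windows: `chatbot-env\Scripts\activate`

- Mac/Linux: `source chatbot-env/bin/activate`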
3. Install Required Libraries:

Use pip to install the required libraries. At a minimum, you'll need PyTorch and Transformers:

`pip install torch transformers`

These libraries allow you to work with pre-trained models, run NLP tasks, and build deep learning models.
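After installing, a small standard-library check can confirm that the packages are importable and report their installed versions. It is a sketch that assumes Python 3.8+ (for `importlib.metadata`) and that each package's import name matches its distribution name, which holds for `torch` and `transformers`.

```python
import importlib.util
from importlib.metadata import PackageNotFoundError, version  # Python 3.8+
from typing import Dict, Iterable, Optional


def installed_versions(packages: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each package to its installed version, None if missing, 'unknown' if no metadata."""
    result: Dict[str, Optional[str]] = {}
    for name in packages:
        if importlib.util.find_spec(name) is None:
            result[name] = None  # not importable in this environment
            continue
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = "unknown"  # importable, but no distribution metadata found
    return result


print(installed_versions(["torch", "transformers"]))
```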

Step 3: Training Your Chatbot

While you can use pre-trained models as they are, fine-tuning them usually makes them far better suited to your specific needs. Fine-tuning is the process of further training a pre-trained model on a smaller, task-specific dataset so that it fits your use case.

1. Load Pre-trained Models:

For example, the Transformers library can load the pre-trained GPT-2 model and its tokenizer from the Hugging Face Hub with its `AutoTokenizer` and `AutoModelForCausalLM` classes.
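A minimal sketch using those classes follows. It assumes `torch` and `transformers` are installed and that the machine can download the "gpt2" checkpoint from the Hugging Face Hub; the function names and sampling settings (top-p 0.9, 50 new tokens) are illustrative choices rather than required values.

```python
def load_model(model_name: str = "gpt2"):
    """Download (or load from cache) a pre-trained causal language model and its tokenizer."""
    from transformers import AutoModelForCausalLM, AutoTokenizer  # lazy import

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(model_name)
    model.eval()  # inference mode: disables dropout
    return tokenizer, model


def generate_reply(tokenizer, model, prompt: str, max_new_tokens: int = 50) -> str:
    """Continue the prompt with sampled text and return only the newly generated part."""
    import torch

    inputs = tokenizer(prompt, return_tensors="pt")
    with torch.no_grad():  # no gradients needed for generation
        output_ids = model.generate(
            **inputs,
            max_new_tokens=max_new_tokens,
            do_sample=True,
            top_p=0.9,
            pad_token_id=tokenizer.eos_token_id,  # GPT-2 has no pad token of its own
        )
    # Skip the prompt tokens so only the model's continuation is returned.
    new_tokens = output_ids[0, inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

For example, `tokenizer, model = load_model()` followed by `generate_reply(tokenizer, model, "Hello, how can I help you today?")` returns a short continuation. Plain GPT-2 was not trained on dialogue, so its replies often drift off topic, which is the problem fine-tuning addresses.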

2. Train on Custom Datasets:

If you have a dataset, such as conversations or dialogues, you can fine-tune the model to adapt it to your specific domain. For example, if you want your chatbot to provide customer support, you can train it with relevant conversations.

Fine-tuning with Hugging Face can be done with the example scripts described in the Hugging Face documentation, or in your own code with the Transformers `Trainer` class.
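Before any fine-tuning script can use your conversations, they have to be flattened into plain training text. One common approach, sketched below, is to prefix each turn with a speaker tag, put each conversation on a single line (so line-based loaders treat it as one example), and end it with GPT-2's end-of-text token `<|endoftext|>`. The "User"/"Agent" tags and the helper name are assumptions; whatever format you choose, use the same one when you later prompt the model.

```python
from typing import List, Tuple

# A conversation is a list of (speaker, text) turns.
Conversation = List[Tuple[str, str]]

EOS = "<|endoftext|>"  # GPT-2's end-of-text token, used here to mark where a conversation ends


def format_conversation(turns: Conversation) -> str:
    """Render one conversation as a single tagged line, e.g. 'User: ... Agent: ...'."""
    # ' '.join(text.split()) collapses newlines and repeated spaces inside a turn.
    parts = [f"{speaker}: {' '.join(text.split())}" for speaker, text in turns]
    return " ".join(parts) + EOS


example = [
    ("User", "My order hasn't arrived."),
    ("Agent", "Sorry to hear that! Could you share your order number?"),
]
print(format_conversation(example))
```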

Step 4: Fine-Tuning the Model

Fine-tuning involves updating the model’s weights by exposing it to your custom dataset. This allows your chatbot to learn how to interact in a way that aligns with your specific requirements.
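For a GPT-style model, "updating the weights" has a precise meaning: fine-tuning minimizes the same next-token prediction loss used in pre-training, now computed on your dataset. For a training sequence of tokens $x_1, \dots, x_T$ and model parameters $\theta$, the averaged loss and a plain gradient-descent step with learning rate $\eta$ are shown below; in practice, libraries use optimizers such as AdamW instead of this plain update.

```latex
\mathcal{L}(\theta) = -\frac{1}{T-1}\sum_{t=2}^{T} \log p_\theta\left(x_t \mid x_1, \dots, x_{t-1}\right),
\qquad
\theta \leftarrow \theta - \eta \, \nabla_\theta \mathcal{L}(\theta)
```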

1. Prepare Your Dataset:

To fine-tune your model, you'll need a text dataset of dialogues. You can either use an existing dataset (e.g., the Cornell Movie-Dialogs Corpus or Persona-Chat) or collect your own.
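Whichever source you use, it helps to hold back part of the data for validation so you can tell whether the model is generalizing rather than memorizing. The standard-library sketch below shuffles already-formatted conversations (one per line) with a fixed seed, keeps 10% for validation, and writes both files. The file names `conversations.txt` and `validation.txt`, the 10% ratio, and the helper name are assumptions.

```python
import random
from pathlib import Path
from typing import List, Tuple


def split_and_write(conversations: List[str], out_dir: str,
                    val_fraction: float = 0.1, seed: int = 42) -> Tuple[int, int]:
    """Shuffle formatted conversations, split off a validation set, and write both files.

    Each conversation must already be a single line of text. Returns (n_train, n_val).
    """
    shuffled = conversations[:]            # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)  # fixed seed gives a reproducible split
    n_val = max(1, int(len(shuffled) * val_fraction)) if len(shuffled) > 1 else 0
    val, train = shuffled[:n_val], shuffled[n_val:]

    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    (out / "conversations.txt").write_text("".join(c + "\n" for c in train), encoding="utf-8")
    (out / "validation.txt").write_text("".join(c + "\n" for c in val), encoding="utf-8")
    return len(train), len(val)
```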

2. Run Fine-Tuning Scripts:

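Once your dataset is ready, you can use Hugging Face's example fine-tuning scripts to train the model. For a causal language model such as GPT-2, the relevant one is the language-modeling example `run_clm.py` in the Transformers repository: pointing it at GPT-2 as the base model and at conversations.txt as the training file fine-tunes GPT-2 on the conversation data in that file.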
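If you prefer to stay in Python rather than run a script, the same kind of fine-tuning can be written with the Transformers `Trainer` API. The sketch below assumes `torch`, `transformers`, and `datasets` are installed and that conversations.txt holds one formatted conversation per line; the hyperparameters (block size 128, 3 epochs, batch size 4) and the output folder name are placeholders to tune. The `group_texts` step, which concatenates tokens and cuts them into fixed-size blocks, mirrors the approach used in the Transformers causal language-modeling example.

```python
from itertools import chain
from typing import Dict, List


def group_texts(examples: Dict[str, List[List[int]]], block_size: int) -> Dict[str, List[List[int]]]:
    """Concatenate tokenized lines and cut them into equal blocks of block_size tokens.

    Leftover tokens that do not fill a whole block are dropped. Labels are a copy of
    input_ids; the model shifts them internally for next-token prediction.
    """
    concatenated = {k: list(chain.from_iterable(examples[k])) for k in examples}
    total = (len(concatenated["input_ids"]) // block_size) * block_size
    result = {
        k: [v[i:i + block_size] for i in range(0, total, block_size)]
        for k, v in concatenated.items()
    }
    result["labels"] = [ids.copy() for ids in result["input_ids"]]
    return result


def fine_tune(train_file: str = "conversations.txt", output_dir: str = "gpt2-chatbot",
              block_size: int = 128, epochs: int = 3) -> None:
    """Fine-tune GPT-2 on a one-conversation-per-line text file and save the result."""
    # Heavy imports live inside the function so group_texts can be used without them.
    from datasets import load_dataset
    from transformers import (AutoModelForCausalLM, AutoTokenizer, Trainer,
                              TrainingArguments, default_data_collator)

    tokenizer = AutoTokenizer.from_pretrained("gpt2")
    model = AutoModelForCausalLM.from_pretrained("gpt2")

    raw = load_dataset("text", data_files={"train": train_file})
    tokenized = raw.map(lambda batch: tokenizer(batch["text"]),
                        batched=True, remove_columns=["text"])
    lm_dataset = tokenized.map(group_texts, batched=True,
                               fn_kwargs={"block_size": block_size})

    args = TrainingArguments(
        output_dir=output_dir,
        num_train_epochs=epochs,
        per_device_train_batch_size=4,
        logging_steps=50,       # how often the training loss is logged
        save_strategy="epoch",  # write a checkpoint at the end of each epoch
    )
    trainer = Trainer(model=model, args=args, train_dataset=lm_dataset["train"],
                      data_collator=default_data_collator)
    trainer.train()
    trainer.save_model(output_dir)         # weights and config
    tokenizer.save_pretrained(output_dir)  # so the folder reloads with from_pretrained
```

Calling `fine_tune()` with the defaults trains on conversations.txt and writes the model to the gpt2-chatbot folder, which can then be loaded with `from_pretrained("gpt2-chatbot")`. Grouping into full blocks avoids padding entirely, so no pad token has to be configured for GPT-2.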

3. Monitor the Fine-Tuning:

Fine-tuning can take a long time, depending on the size of your dataset and model and the computational power available. You can monitor progress, above all the training and validation loss, with TensorBoard or other logging tools.
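For language models the logged loss is often converted to perplexity, exp(loss), which is easier to interpret: roughly, how many tokens the model is choosing between at each step. The hypothetical helpers below convert logged validation losses to perplexity and flag when validation loss has stopped improving for a given number of evaluations, a common signal that further training is only memorizing the data.

```python
import math
from typing import List


def perplexity(loss: float) -> float:
    """Perplexity of a language model from its mean cross-entropy loss (natural log)."""
    return math.exp(loss)


def should_stop(val_losses: List[float], patience: int = 2) -> bool:
    """True if the last `patience` evaluations did not beat the best earlier loss."""
    if len(val_losses) <= patience:
        return False
    best_before = min(val_losses[:-patience])
    return min(val_losses[-patience:]) >= best_before


history = [3.2, 2.9, 2.7, 2.75, 2.8]  # example validation losses, one per evaluation
print([round(perplexity(loss), 1) for loss in history])
print("Stop training:", should_stop(history))
```

If you train with the Transformers `Trainer`, its built-in `EarlyStoppingCallback` implements the same idea.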

Step 5: Deploying Your Chatbot

After fine-tuning, you’ll want to deploy your chatbot so that others can interact with it. Here are some options for deployment:

1. Host on Cloud Platforms:

Hosting on AWS, Google Cloud, or Microsoft Azure makes it easier to scale the service and keep it reliable. GPU instances speed up inference, and you can add more instances as traffic grows.
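On a GPU instance, the model and its inputs have to be moved onto the GPU explicitly. A minimal sketch, assuming PyTorch as installed earlier; the function name is illustrative, and it falls back to the CPU when no CUDA GPU (or no PyTorch) is available.

```python
def pick_device() -> str:
    """Return 'cuda' if PyTorch can see an NVIDIA GPU, otherwise 'cpu'."""
    try:
        import torch
    except ImportError:
        return "cpu"  # PyTorch not installed: nothing to accelerate
    return "cuda" if torch.cuda.is_available() else "cpu"


device = pick_device()
print("Running inference on:", device)
# With a model loaded via Transformers, you would then call:
#   model.to(device)
#   inputs = tokenizer(prompt, return_tensors="pt").to(device)
```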

2. Create a Web Interface:

Use a web framework like Flask or FastAPI to expose your model as a web service. Here’s an example using Flask:
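A minimal sketch of such a service follows. It assumes Flask 2.0 or later is installed (`pip install flask`) and takes the reply function as a parameter so it can wrap any model, for instance a function that calls your fine-tuned model's `generate`. The `/chat` route, the JSON field name `message`, and the helper names are assumptions.

```python
from typing import Any, Callable


def parse_chat_request(payload: Any) -> str:
    """Validate a JSON body like {"message": "..."} and return the cleaned message."""
    if not isinstance(payload, dict) or not isinstance(payload.get("message"), str):
        raise ValueError('expected a JSON object with a string "message" field')
    message = payload["message"].strip()
    if not message:
        raise ValueError("message must not be empty")
    return message


def create_app(reply_fn: Callable[[str], str]):
    """Build a Flask app exposing POST /chat; reply_fn maps user text to bot text."""
    from flask import Flask, jsonify, request  # lazy import: only needed to serve

    app = Flask(__name__)

    @app.post("/chat")
    def chat():
        try:
            # silent=True returns None instead of raising on a missing or invalid JSON body.
            message = parse_chat_request(request.get_json(silent=True))
        except ValueError as err:
            return jsonify({"error": str(err)}), 400
        return jsonify({"reply": reply_fn(message)})

    return app
```

For a quick local test, `create_app(lambda text: "You said: " + text).run(port=5000)` starts Flask's development server; for production, run the app behind a WSGI server such as Gunicorn instead.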